Disagreements Accurate Answers to Challenging Factual Questions

Create and release your Profile on Zintellect – Postdoctoral applicants must create an account and complete a profile in the on-line application system. Please note: your resume/CV may not exceed 2 pages.

Complete your application – Enter the rest of the information required for the IC Postdoc Program Research Opportunity. The application itself contains detailed instructions for each one of these components: availability, citizenship, transcripts, dissertation abstract, publication and presentation plan, and information about your Research Advisor co-applicant.

Additional information about the IC Postdoctoral Research Fellowship Program is available on the program website located at: https://orise.orau.gov/icpostdoc/index.html.

If you have questions, send an email to ICPostdoc@orau.org. Please include the reference code for this opportunity in your email.

Research Topic Description, including Problem Statement:

Recent research found two rapid, reliable ways to increase the accuracy of answers to challenging factual questions, particularly when experts disagree: (1) Aggregation of answers from individuals working independently. One study (Kosinski et al., 2012) began with answers to an IQ test taken by individuals with an average IQ of 120. Mechanically selecting the most common answer to each question produced a “group IQ” of about 150. (2) Brief discussion between two people who disagree on the answer. One study (Chen et al., 2019) used this method to increase the rate of correct answers from 67% to 98.8%.

Research is needed to develop an effective technique for combining aggregation of answers with small-group discussion. Any technique should be tested on increasingly difficult questions, starting with questions that come with multiple-choice answers and then extending the technique to non-multiple-choice questions. For the latter, initial answers from many informed participants could produce a set of plausible multiple-choice answers to each question, to which the technique could then be applied.

Any developed technique should be easy and natural for busy professionals to use on the job. It should require no formal training or technical knowledge. It should be effective on a wide variety of factual questions, including predictions.
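As a rough illustration of the first method, the sketch below mechanically selects the most common answer to each question from independent responses. It is a minimal example, not the procedure used in the cited studies; the function names, the question IDs, and the toy answers are all hypothetical. The vote share returned with each answer is an added convenience for spotting weak pluralities.

```python
from collections import Counter


def plurality_answer(answers):
    """Return (most common answer, its share of the votes).

    Returns (None, 0.0) for an empty list. Ties are broken in favor of
    the answer that appears first in the input.
    """
    if not answers:
        return None, 0.0
    top, count = Counter(answers).most_common(1)[0]
    return top, count / len(answers)


def aggregate(responses):
    """Map each question ID to its plurality answer and vote share.

    `responses` is a dict: question ID -> list of independent answers.
    """
    return {q: plurality_answer(a) for q, a in responses.items()}


# Toy data (hypothetical): five participants, two multiple-choice questions.
responses = {
    "q1": ["B", "B", "A", "B", "C"],
    "q2": ["A", "D", "D", "A", "D"],
}
result = aggregate(responses)
for question, (answer, share) in result.items():
    print(question, answer, share)
```

A low vote share for the winning answer is one simple signal that a question might be worth sending to small-group discussion rather than accepting the plurality outright.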

Example Approaches:

- Develop statistically sophisticated aggregation techniques using only participants’ answers (not ground truth) to determine the conditions in which participants are likely to do well or poorly on a question. Use that information to detect when the plurality answer is likely to be wrong.

- Develop more-effective discussion techniques, e.g., by making it easier for participants to acknowledge that their initial answer was likely mistaken.

- In addition to asking participants for the correct answer, ask them what the most popular answer is likely to be. Aggregate these two sources of information to increase accuracy.

- Increase accuracy by having participants state their reasons, not just their answers, and invite other participants to rate or comment on the reasons.
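The third approach above, combining each participant's own answer with their guess about the most popular answer, can be sketched as follows. This follows the idea often called the "surprisingly popular" rule: choose the answer whose actual vote share most exceeds the share participants predicted for it. It is a simplified sketch under the assumption that each participant names a single predicted-popular answer; the function name and the toy data are hypothetical and not taken from the posting or its references.

```python
from collections import Counter


def surprisingly_popular(own_answers, predicted_popular):
    """Return the answer whose actual share most exceeds its predicted share.

    own_answers: each participant's own answer.
    predicted_popular: each participant's guess at the most popular answer.
    Returns None if either list is empty.
    """
    if not own_answers or not predicted_popular:
        return None
    actual = Counter(own_answers)
    predicted = Counter(predicted_popular)
    options = set(actual) | set(predicted)

    def surplus(option):
        # Counter returns 0 for options nobody chose.
        actual_share = actual[option] / len(own_answers)
        predicted_share = predicted[option] / len(predicted_popular)
        return actual_share - predicted_share

    # Sorting first makes tie-breaking deterministic.
    return max(sorted(options), key=surplus)


# Toy data (hypothetical): "yes" wins the plurality vote, but nearly
# everyone expected "yes" to be popular, so "no" is surprisingly popular.
own = ["yes"] * 6 + ["no"] * 4
guesses = ["yes"] * 9 + ["no"] * 1
choice = surprisingly_popular(own, guesses)
print(choice)  # prints "no"
```

The intuition is that participants who hold a minority answer while correctly expecting most others to disagree may know something the majority does not; whether that holds for a given question set would need to be tested empirically.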

Relevance to the Intelligence Community:

Intelligence analysis often involves making judgments on factual questions. An effective method to help analysts perform this task quickly and accurately will strengthen intelligence analysis and provide better support for policy-makers.

References:

- Kosinski, M., Y. Bachrach, G. Kasneci, J. Van Gael, & T. Graepel (2012). Crowd IQ: measuring the intelligence of crowdsourcing platforms. Proceedings of the 4th Annual ACM Web Science Conference.

- Bachrach, Y., T. Graepel, G. Kasneci, M. Kosinski, & J. Van Gael (2012). Crowd IQ: aggregating opinions to boost performance. Proceedings of the 11th International Conference on Autonomous Agents and Multiagent Systems.

- Mercier, H. & D. Sperber (2017). The enigma of reason. Harvard University Press.

- Chen, Q., J. Bragg, L. Chilton & D. Weld (2019). Cicero: multi-turn, contextual argumentation for accurate crowdsourcing. CHI 2019.

- Drapeau, R., L. Chilton, J. Bragg, & D. Weld (2016). MicroTalk: Using argumentation to improve crowdsourcing accuracy. Proceedings of the Fourth AAAI Conference on Human Computation and Crowdsourcing.

- Schaekermann, M., J. Goh, K. Larson, & E. Law (2018). Resolvable vs. irresolvable disagreement: a study on worker deliberation in crowdwork. Proceedings of the ACM on Human-Computer Interaction.

Key Words: Aggregation of Opinion, Crowdsourcing, Reasoning, Expert Elicitation, Human-Computer Interaction

Postdoc Eligibility

- U.S. citizens only

- Ph.D. in a relevant field must be completed before beginning the appointment and within five years of the application deadline

- Proposal must be associated with an accredited U.S. university, college, or U.S. government laboratory

- Eligible candidates may only receive one award from the IC Postdoctoral Research Fellowship Program

Research Advisor Eligibility

- Must be an employee of an accredited U.S. university, college or U.S. government laboratory

- Is not required to be a U.S. citizen

- Citizenship: U.S. Citizen Only

- Degree: Doctoral Degree.

- Discipline(s):

  - Chemistry and Materials Sciences (12)

  - Communications and Graphics Design (2)

  - Computer, Information, and Data Sciences (16)

  - Earth and Geosciences (21)

  - Engineering (27)

  - Environmental and Marine Sciences (14)

  - Life Health and Medical Sciences (45)

  - Mathematics and Statistics (10)

  - Other Non-Science & Engineering (2)

  - Physics (16)

  - Science & Engineering-related (1)

  - Social and Behavioral Sciences (27)

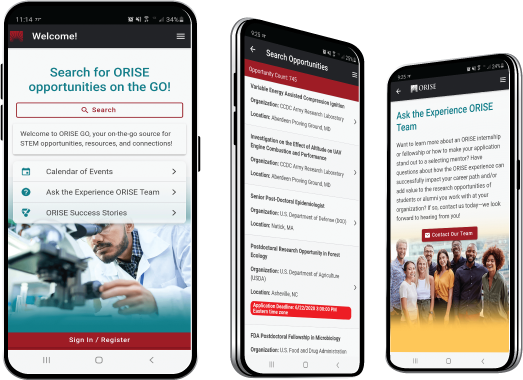

ORISE GO

The ORISE GO mobile app helps you stay engaged, connected and informed during your ORISE experience – from application, to offer, through your appointment and even as an ORISE alum!